Phase 1 - Displaying Transcription Subtitles

The first phase of GSoC 2018 is coming to an end. Let me share my experience and contributions so far with this blog post :)

What did I work on?

My main task in this phase was to work on Jitsi Meet, the repository containing the front-end client of the application.

Existing Code

One of my mentors, Nik Vaessen, worked on integrating Speech to Text as a GSoC student in 2016 and 2017.

Nik made a pull request at the end of his coding period last summer for rendering subtitles in the form of a React Component. I picked up from where Nik had left off and continued working on it.

My Work

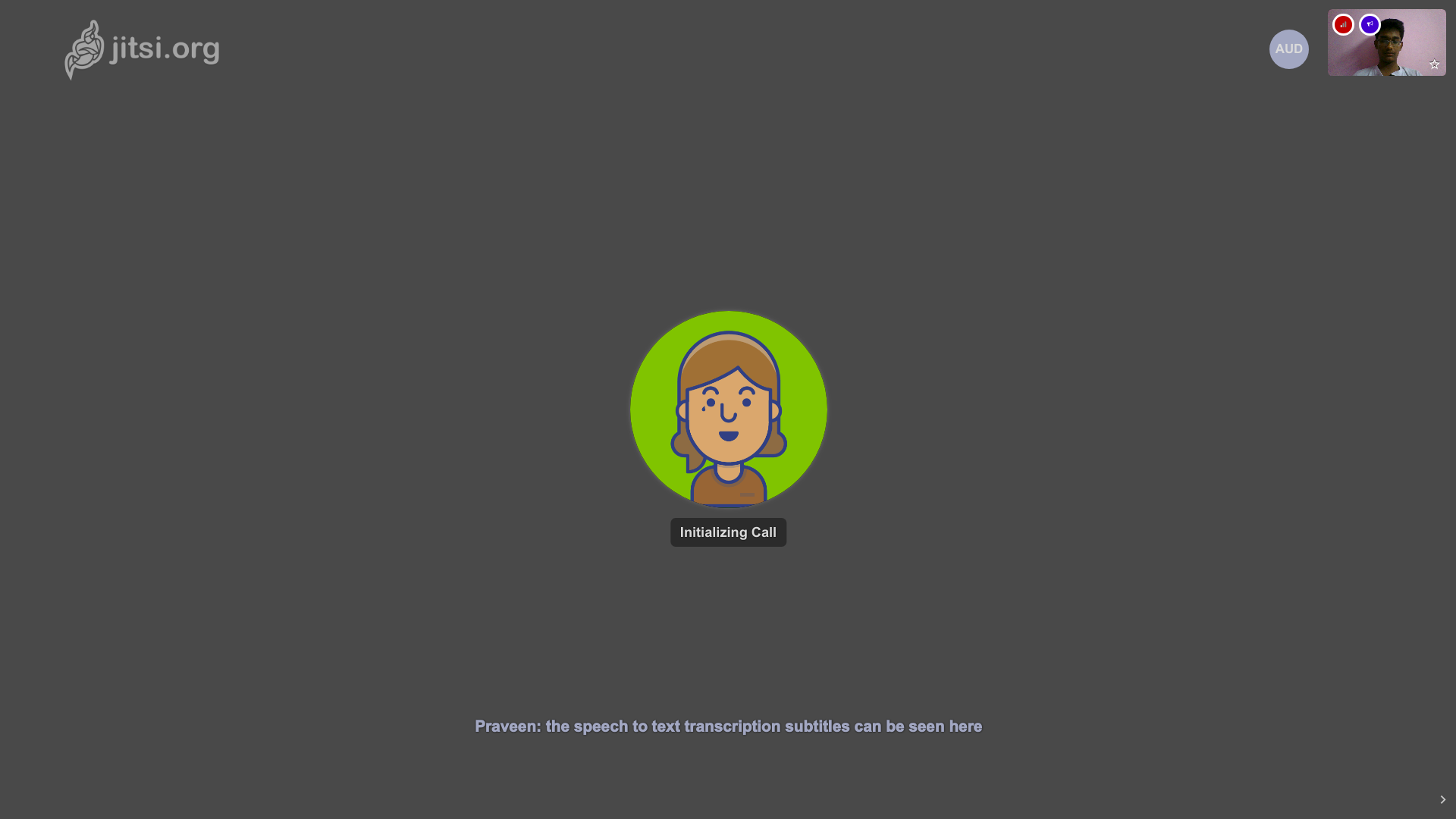

I worked on the transcription feature to enable displaying (nearly) real time transcription subtitles conference. So, currently the subtitles can be seen as follows :

I made a couple of pull requests to my mentor’s fork, whose changes are reflected in the pull request in the main repository:

https://github.com/jitsi/jitsi-meet/pull/1914

Technical aspects - How things work?

Jigasi (JItsi GAteway to SIP) implements a speech-to-text service using Google Cloud Speech to Text and acts as a transcriber by joining the meeting room as a participant. During the meeting, Jigasi receives the audio chunks of the participants, forwards them to the Google API, and sends the transcribed message back to the participants in the JSON format seen below.

    {
        "transcript": [
            {
                "confidence": 0.0,
                "text": "Testing Speech to Text"
            }
        ],
        "is_interim": false,
        "language": "en-US",
        "message_id": "8360900e-5fca-4d9c-baf3-6b24206dfbd7",
        "event": "SPEECH",
        "participant": {
            "name": "Praveen",
            "id": "d14c8f32"
        },
        "stability": 0.0,
        "timestamp": "2017-06-24T11:04:05.637Z"
    }

This payload, once received by the conference, triggers an ENDPOINT_MESSAGE_RECEIVED event listener. This listener dispatches the endpointMessageReceived Redux action. The middleware of the transcription feature listens for the endpointMessageReceived action and uses the received payload to update the subtitles. The Redux state of features/transcription contains a Map, transcriptionSubtitles, of key-value pairs that map each message_id to its text.
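To make the flow above more concrete, here is a minimal sketch of it. It is not the actual Jitsi Meet code: the UPDATE_TRANSCRIPT_MESSAGE action type, the reducer, and the createTinyStore helper are hypothetical names I made up so the example runs without Redux installed. Only the endpointMessageReceived action and the transcriptionSubtitles Map come from the description above.

```javascript
// Action types. UPDATE_TRANSCRIPT_MESSAGE is a hypothetical name for this sketch.
const ENDPOINT_MESSAGE_RECEIVED = 'ENDPOINT_MESSAGE_RECEIVED';
const UPDATE_TRANSCRIPT_MESSAGE = 'UPDATE_TRANSCRIPT_MESSAGE';

// Action creator dispatched by the conference listener.
function endpointMessageReceived(json) {
    return { type: ENDPOINT_MESSAGE_RECEIVED, json };
}

// Reducer: keeps a Map of message_id -> text, copied on every update.
function transcriptionReducer(
        state = { transcriptionSubtitles: new Map() }, action) {
    if (action.type === UPDATE_TRANSCRIPT_MESSAGE) {
        const transcriptionSubtitles = new Map(state.transcriptionSubtitles);

        transcriptionSubtitles.set(action.messageId, action.text);

        return { ...state, transcriptionSubtitles };
    }

    return state;
}

// Middleware in the usual Redux shape: store => next => action.
const transcriptionMiddleware = store => next => action => {
    if (action.type === ENDPOINT_MESSAGE_RECEIVED
            && action.json.transcript
            && action.json.transcript.length > 0) {
        store.dispatch({
            type: UPDATE_TRANSCRIPT_MESSAGE,
            messageId: action.json.message_id,
            text: action.json.transcript[0].text
        });
    }

    return next(action);
};

// Tiny stand-in for a Redux store, only here to keep the sketch dependency-free.
function createTinyStore(reduce, middleware) {
    let state = reduce(undefined, { type: '@@INIT' });
    const store = {
        getState: () => state,
        dispatch: action => wrappedDispatch(action)
    };
    const baseDispatch = action => {
        state = reduce(state, action);

        return action;
    };
    const wrappedDispatch = middleware(store)(baseDispatch);

    return store;
}

// Usage: simulate the conference listener receiving the payload shown earlier.
const demoStore = createTinyStore(transcriptionReducer, transcriptionMiddleware);

demoStore.dispatch(endpointMessageReceived({
    message_id: '8360900e-5fca-4d9c-baf3-6b24206dfbd7',
    is_interim: false,
    transcript: [ { confidence: 0.0, text: 'Testing Speech to Text' } ]
}));
console.log(demoStore.getState().transcriptionSubtitles);
```

The middleware lets every action continue down the chain with next(action), so other features that care about endpoint messages are not affected.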

As the audio streams are continuously being sent to the Google API, we need to know when a continuous stream has ended and interpret the next one as a new message. For this to work, we use the detailed information received from the Google API which includes a field telling us if the particular transcript is an interim result or a final one.

In Jigasi, we use the same UUID as the message_id to denote a particular message until we receive a final, stable message.

If the received message_id is not one among the keys in the Map, a pair of message_id and the participant name is added to the Map.

There are 3 scenarios when a message_id exists in the Map:

- If it is a final message, it is added as a final component in the Map value, and a timeout is used to dispatch a REMOVE_TRANSCRIPT_MESSAGE action which removes the key-value pair from the Map after a defined period.

- If it is an interim message with high stability, it is added as a stable component in the Map.

- If it is an interim message with low stability, it is added as an unstable component in the Map.

While displaying a particular message in the Component, either the final message is used as the text, or the stable and unstable components are concatenated and used as the text.
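The three scenarios and the display rule can be sketched roughly as follows. This is an illustration under a few assumptions of mine, not the real middleware: the function names are hypothetical, the 0.5 stability cut-off is made up (the text above only says "high" and "low"), the entry is created and filled in the same step, and clearing the unstable part when a new stable part arrives is a guess about how duplicated text is avoided.

```javascript
// Assumed cut-off between "high" and "low" stability; no number is given above.
const STABILITY_THRESHOLD = 0.5;

// Returns a new Map with the entry for payload.message_id updated.
function applyTranscript(subtitles, payload) {
    const text = payload.transcript[0].text;
    const updated = new Map(subtitles);

    // Unknown message_id: start an entry holding only the participant name.
    const entry = {
        ...(updated.get(payload.message_id)
            || { participantName: payload.participant.name })
    };

    if (!payload.is_interim) {
        // Final result: the caller would also schedule the removal timeout.
        entry.final = text;
    } else if (payload.stability > STABILITY_THRESHOLD) {
        entry.stable = text;
        entry.unstable = undefined; // assumption: drop the outdated unstable part
    } else {
        entry.unstable = text;
    }
    updated.set(payload.message_id, entry);

    return updated;
}

// Text to display: the final text if present, else stable + unstable.
function subtitleText(entry) {
    if (entry.final !== undefined) {
        return entry.final;
    }

    return (entry.stable || '') + (entry.unstable || '');
}

// What a REMOVE_TRANSCRIPT_MESSAGE action would do to the Map.
function removeTranscript(subtitles, messageId) {
    const updated = new Map(subtitles);

    updated.delete(messageId);

    return updated;
}

// Usage: two interim results followed by the final one.
const common = { message_id: 'm1', participant: { name: 'Praveen' } };
let subtitles = new Map();

subtitles = applyTranscript(subtitles, {
    ...common, is_interim: true, stability: 0.9,
    transcript: [ { text: 'Testing speech' } ]
});
subtitles = applyTranscript(subtitles, {
    ...common, is_interim: true, stability: 0.01,
    transcript: [ { text: ' to tex' } ]
});
console.log(subtitleText(subtitles.get('m1')));

subtitles = applyTranscript(subtitles, {
    ...common, is_interim: false, stability: 0.0,
    transcript: [ { text: 'Testing Speech to Text' } ]
});
console.log(subtitleText(subtitles.get('m1')));
```

In the real feature the removal happens from a timeout after the final message; here removeTranscript is kept as a plain function so the sketch stays easy to follow.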

The TranscriptionSubtitles.web.js Component receives the Map as a prop and re-renders the transcription text paragraphs every time the Map gets updated :)

Challenges faced and Learning Outcomes

- Large Codebase: Became familiar with the codebase and the different components involved.

- Basics of XMPP: Learnt more about the XMPP standard for presence and messaging.

- React-Redux: Got familiar with how a React-Redux application works.

- Better version control: You can say I moved on from the add, commit, push philosophy ;)

What am I currently working on?

Currently the transcribed message in JSON format is sent through the XMPP message stanza, encapsulated in its body as follows:

    <message ...>
        <body ...>
            {
                "jitsi-meet-muc-msg-topic": "transcription-result",
                "payload": {
                    "transcript": [
                        {
                            "confidence": 0.0,
                            "text": "this is an example Json message"
                        }
                    ],
                    "is_interim": false,
                    "language": "en-US",
                    "message_id": "14fcde1c-26f8-4c03-ab06-106abccb510b",
                    "event": "SPEECH",
                    "participant": {
                        "name": "Nik",
                        "id": "d62f8c36"
                    },
                    "stability": 0.0,
                    "timestamp": "2017-08-24T11:04:05.637Z"
                }
            }
        </body>
    </message>

With this approach, we cannot know that a received message contains JSON in its body unless we manually try to parse the entire body string and check whether it is a JSON message.

This is not a very neat approach considering the fact that we are using the same data channel for sending multiple types of messages.
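To illustrate why this feels clumsy, here is a rough sketch of what the receiving side has to do with the current format. The function name is hypothetical and this is not the code in the repository; only the jitsi-meet-muc-msg-topic and payload fields come from the stanza shown above.

```javascript
// Every message body has to go through this guess-and-check step,
// because ordinary chat text and JSON share the same <body> element.
function tryExtractTranscription(bodyText) {
    let parsed;

    try {
        parsed = JSON.parse(bodyText);
    } catch (error) {
        // Not JSON at all, so most likely a normal chat message.
        return null;
    }

    if (parsed
            && typeof parsed === 'object'
            && parsed['jitsi-meet-muc-msg-topic'] === 'transcription-result') {
        return parsed.payload;
    }

    // Valid JSON, but not a transcription result.
    return null;
}

// Usage: a chat message and a transcription message arrive the same way.
console.log(tryExtractTranscription('hello everyone'));
console.log(tryExtractTranscription(JSON.stringify({
    'jitsi-meet-muc-msg-topic': 'transcription-result',
    payload: { transcript: [ { text: 'this is an example Json message' } ] }
})));
```

Note that a chat message which happens to be valid JSON would also pass through JSON.parse, so the topic check is the only thing separating the two kinds of content.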

I am working to improve this with a packet extension in Jitsi that encapsulates the JSON body in a json-message element, so that Jigasi sends the following XMPP stanza:

    <message ...>
        <json-message xmlns="https://www.jitsi.org/jitsi-meet">
            {
                "type": "transcription-result",
                "transcript": [
                    {
                        "confidence": 0,
                        "text": "Testing Stuff"
                    }
                ],
                "is_interim": true,
                "language": "en-US",
                "message_id": "8360900e-5fca-4d9c-baf3-6b24206dfbd7",
                "event": "SPEECH",
                "participant": {
                    "name": "Praveen",
                    "id": "2fe3ac1c"
                },
                "stability": 0.009999999776482582,
                "timestamp": "2017-08-21T14:35:46.342Z"
            }
        </json-message>
    </message>

So the required JSON message will be encapsulated in its own packet extension, which can be thought of as a container that holds only JSON strings. After this, we can simply check for that container in the message stanza and interpret its content as a JSON message, whereas the previous approach required trying to parse the body first.
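With the new element, the check on the receiving side could look roughly like this. To keep the sketch runnable anywhere, the stanza is modelled as a plain object with name, xmlns, text and children fields. That representation and the function name are my own simplification; real code would work on the XML element handed over by the XMPP library. The namespace is the one from the stanza above.

```javascript
// Namespace of the json-message element, as shown in the stanza above.
const JSON_MESSAGE_XMLNS = 'https://www.jitsi.org/jitsi-meet';

// Looks for the dedicated container instead of guessing from the body text.
// JSON.parse may still throw if the sender put malformed JSON in the container.
function extractJsonMessage(stanza) {
    const container = (stanza.children || []).find(child =>
        child.name === 'json-message' && child.xmlns === JSON_MESSAGE_XMLNS);

    return container ? JSON.parse(container.text) : null;
}

// Usage: a chat message has no container, a transcription message does.
const chatStanza = {
    name: 'message',
    children: [ { name: 'body', text: 'hello everyone' } ]
};
const transcriptionStanza = {
    name: 'message',
    children: [ {
        name: 'json-message',
        xmlns: JSON_MESSAGE_XMLNS,
        text: '{"type":"transcription-result","transcript":[{"text":"Testing Stuff"}]}'
    } ]
};

console.log(extractJsonMessage(chatStanza));
console.log(extractJsonMessage(transcriptionStanza).type);
```

The chat body is never passed to JSON.parse here, which is the main difference from the body-based approach.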

Future Work

Initially we decided to go with client-side translations, but after researching the available options, we decided to switch to server-side translations due to:

- Lack of free services.

- Requirement of authentication for the paid ones which may lead to abuse of the service and extra costs.

Server-Side Translations will be helpful as:

- More secure as only the required audio will be translated.

- It will prevent misuse of paid translation services.

- The abstract implementation will make it easy to extend with other external translation services of choice, or with a locally set-up service.

- Ease in preparing the final transcript of the meet in the required translated language.